Complexity Trends¶

Complexity Trends are used to get more information around our Hotspots.

Once we’ve identified a number of Hotspots, we need to understand how they evolve: are they Hotspots because they get more and more complicated over time? or is it more a question of minor changes to a stable code structure? Complexity Trends help you answer these questions.

Complexity Trends are calculated from the Evolution of a Hotspot¶

A Complexity Trend is calculated by fetching each historic version of a Hotspot and calculating the code complexity of those historic versions. The algorithm allows us to plot a trend over time as illustrated in Fig. 111.

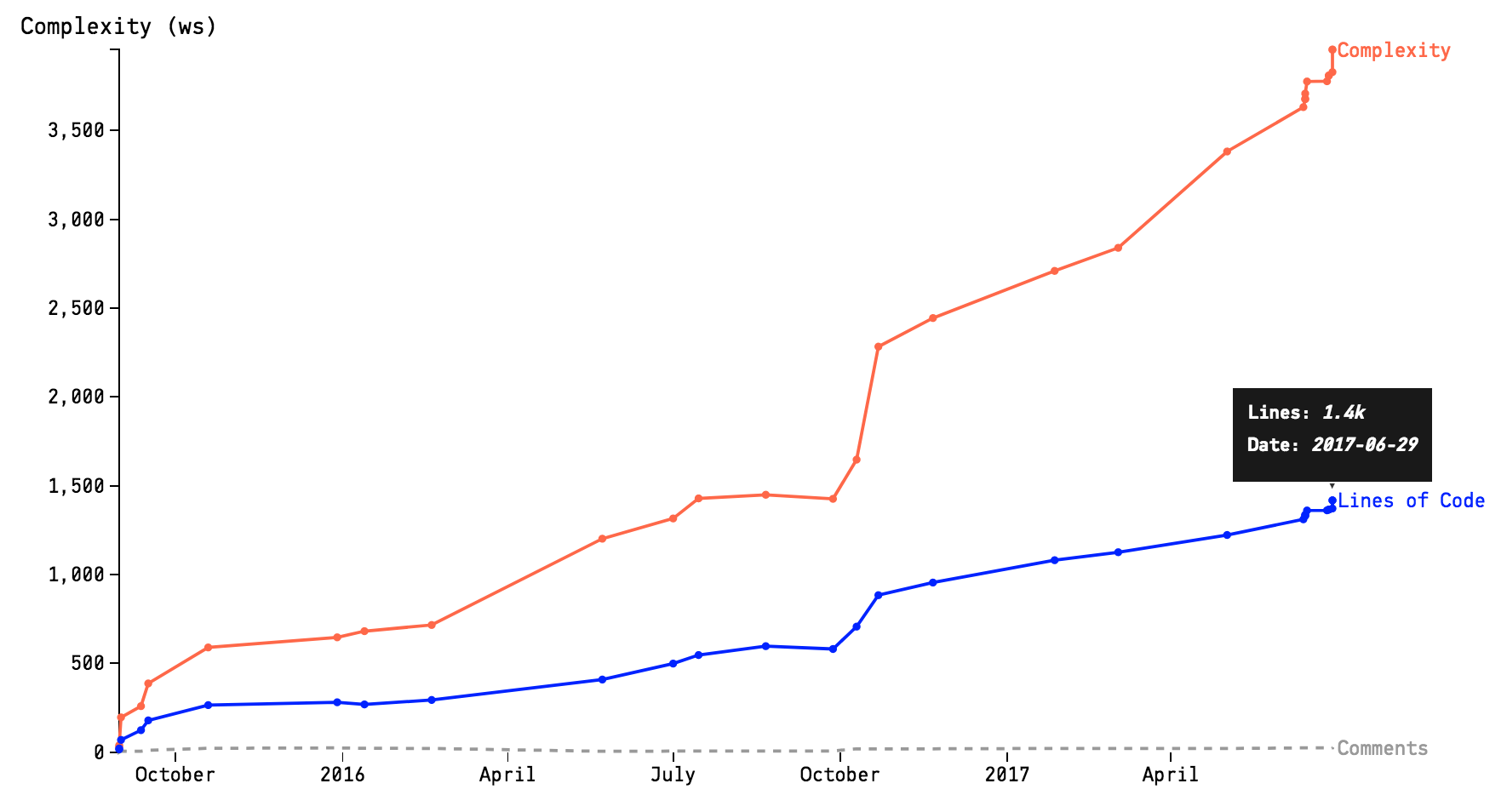

Fig. 111 A complexity trend sample.¶

The picture above shows the complexity trend of single hotspot, starting in mid 2015 and showing its evolution over the next year. It paints a worrisome picture since the complexity has started to grow rapidly.

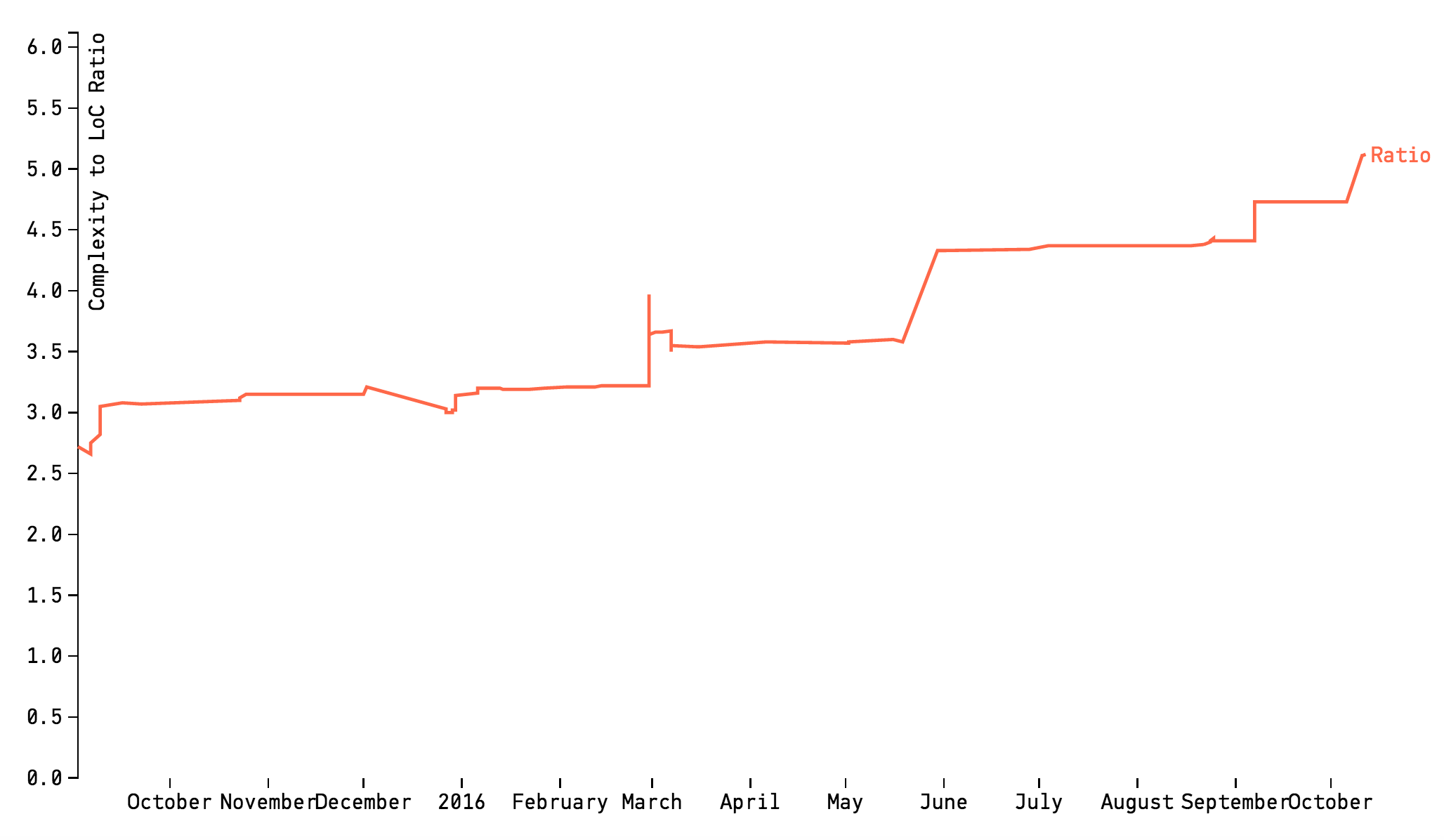

Worse, as evidenced by the Complexity/Lines of Code ratio shown in Fig. 112, the complexity grows non-linearly to the amount of new code, which indicates that the code in the hotspot is getting harder and harder to understand. You also see that the accumulation of complexity isn’t followed by any increase in descriptive comments. So if you ever needed ammunition to motivate a refactoring, well, it doesn’t get more evident than cases like this. This file looks more and more like a true maintenance problem.

Fig. 112 The ration between complexity and lines of code accumulation.¶

We’ll soon explain how we measure complexity. But let’s cover the most important aspect of Complexity Trends first. Let’s understand the kind of patterns we can expect.

Know your Complexity Trend Patterns¶

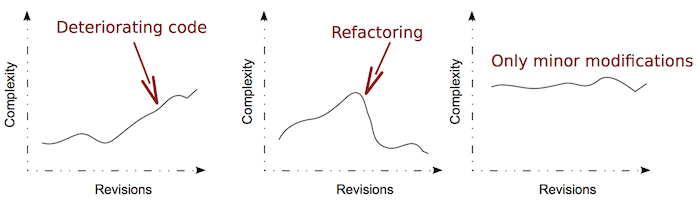

When interpreting complexity trends, the absolute numbers are the least interesting part. You want to focus on the overall shape and pattern first. Fig. 113 illustrates the shapes you’re most likely to find in a codebase.

Fig. 113 Complexity trend patterns you might find in a codebase.¶

Let’s have a more detailed look at what the three typical patterns you see above actually mean.

The Pattern for Deteriorating Code¶

The pattern to the left, Deteriorating Code, is a sign that the Hotspot needs refactoring. The code has kept accumulating complexity. Code does that in either (or both) of the following ways:

Code Accumulates Responsibilities: A common case is that new features and requirements are squeezed into an existing class or module. Over time, the unit’s cohesion drops significantly. The consequence of that for our ability to maintain the code is severe: we will now have to change the same unit of code for many different reasons. Not only does it put us at risk for unexpected feature interactions and defects, but it’s also harder to re-use the code and to modify it due to the excess cognitive load we face in a module with more or less related functionality.

Constant Modification to a Stable Structure: Another common reason that code becomes a hotspot is because of a low-quality implementation. We constantly have to re-visit the code, add an if-statement to fix some corner case and perhaps introduce that missing else-branch. Soon, the code becomes a maintenance nightmare of mythical proportions (you know, the kind of code you use to scare new recruits).

Complexity Trends let you detect these two potential problems early. Once you’ve found them, you need to refactor the code. And Complexity Trends are useful to track your improvements too. Let’s see how.

Track Improvements with Complexity Trends¶

Have one more look at the picture above. Do you see the second pattern, “Refactoring”? A downward slope in a complexity trend is a good sign. It either means that your code is getting simpler (perhaps as those nasty if-else-chains get refactored into a polymorphic solution) or that there’s less code because you extract unrelated parts into other modules.

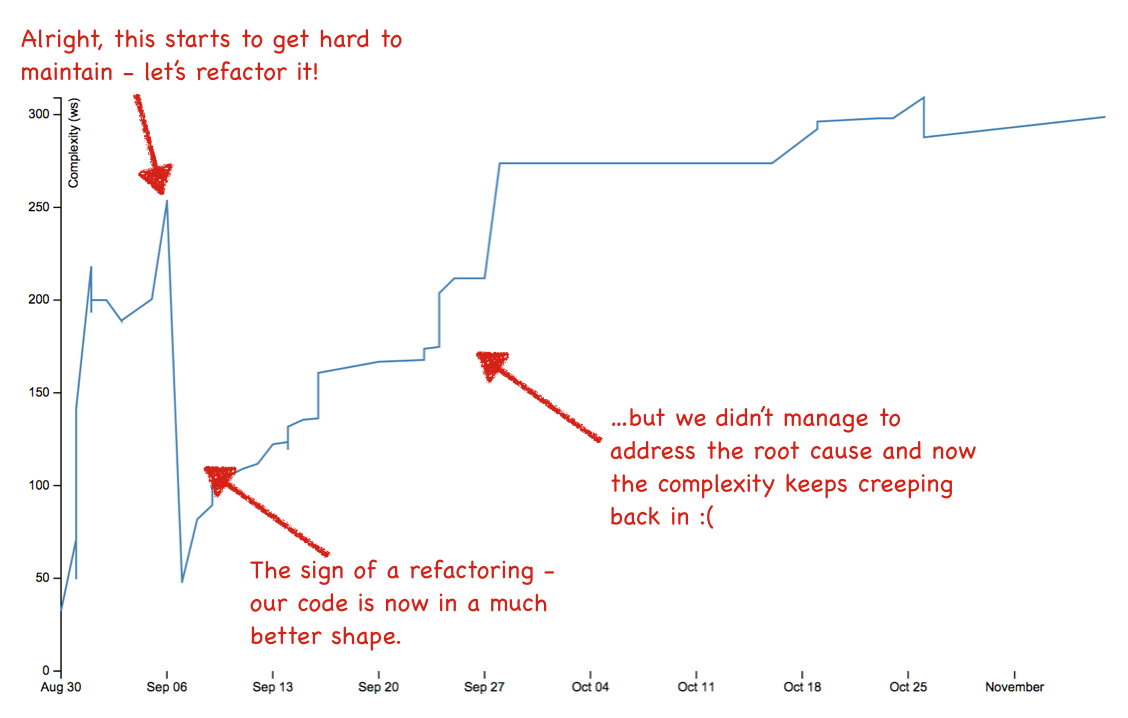

Now, please pat yourself on the back if you have the Refactoring trend in your hotspots - it’s great! But do keep exploring the complexity trends. What often happens is that we spend an awful amount of time and money on improving something, fail to address the root cause, and soon the complexity slips back in. Fig. 114 illustrates one such scary case.

Fig. 114 Example on failed refactoring.¶

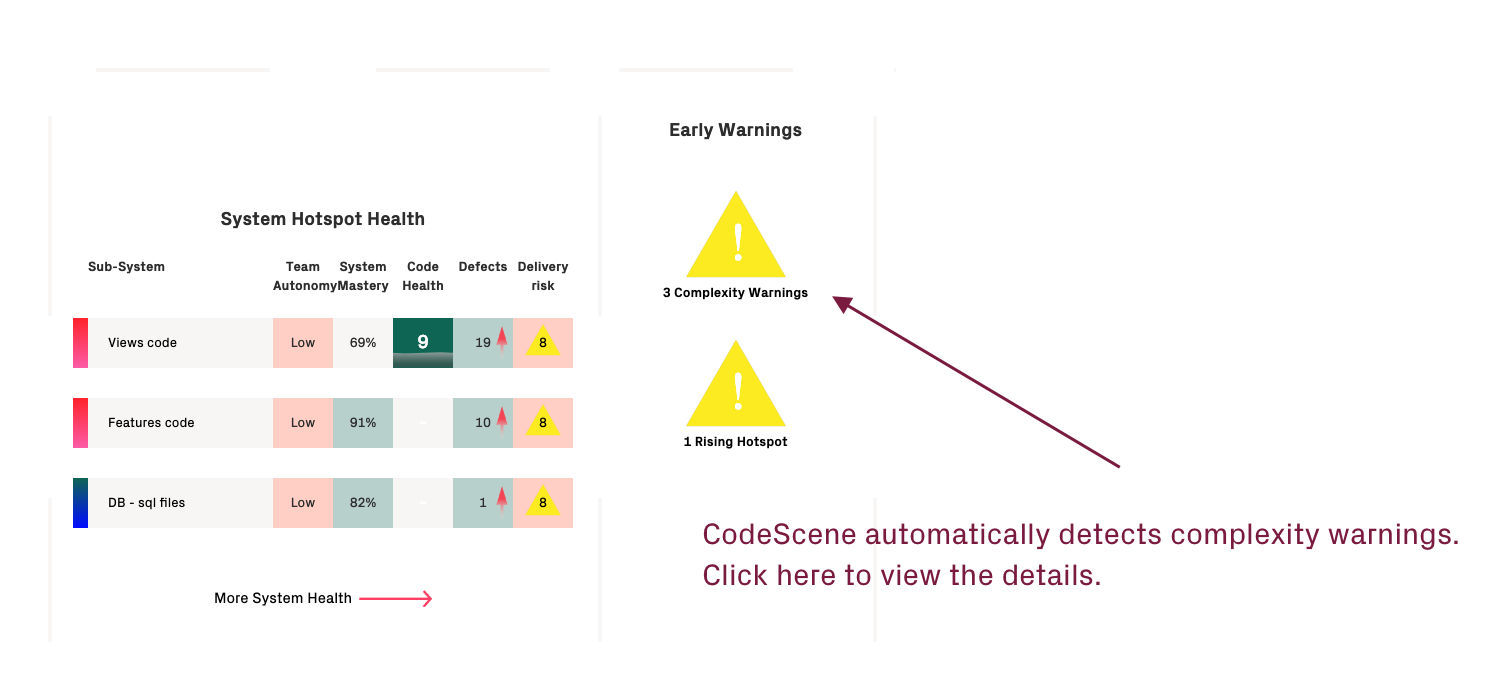

You might think this a special case. But let me assure you - during the work on these analysis techniques we analysed hundred of codebases and we found this pattern more often than not. So please, make it a habit to supervise your complexity trend; CodeScene will even do it automatically for you, as illustrated in Fig. 115. Those complexity trend warnings are triggered when the code complexity in any part of your code starts to grow at a rapid rate.

Fig. 115 CodeScene supervises your complexity trends.¶

Diff your Complexity Trends¶

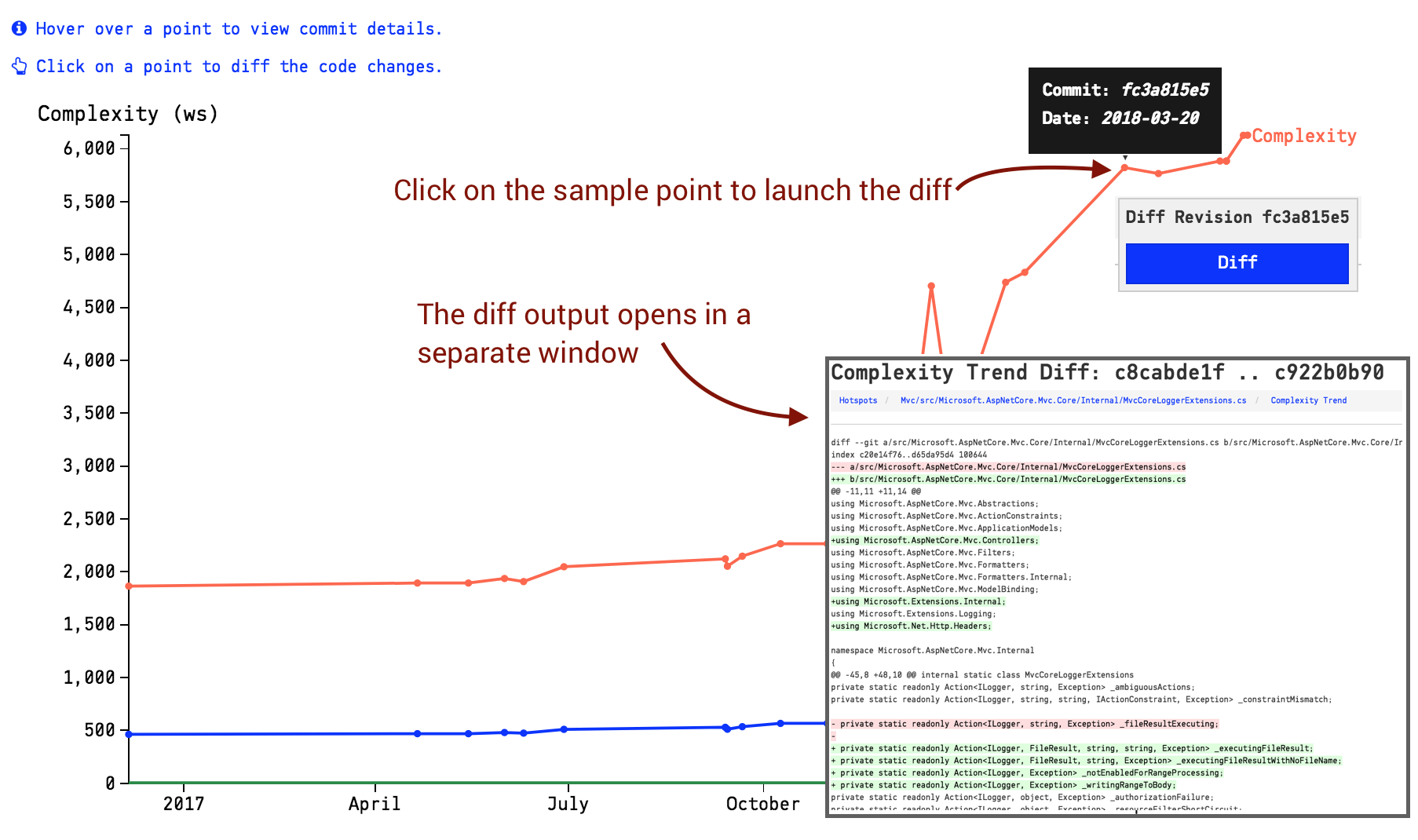

CodeScene provides an automated diff between the sample points in a complexity trend. A diff is a useful investigative tool when you look to explain why a trend suddenly increased or dropped in complexity.

You run a diff by clicking on one of the sample points in the complexity trend, as shown in Fig. 116.

Fig. 116 Diff a complexity trend by clicking on a sample point.¶

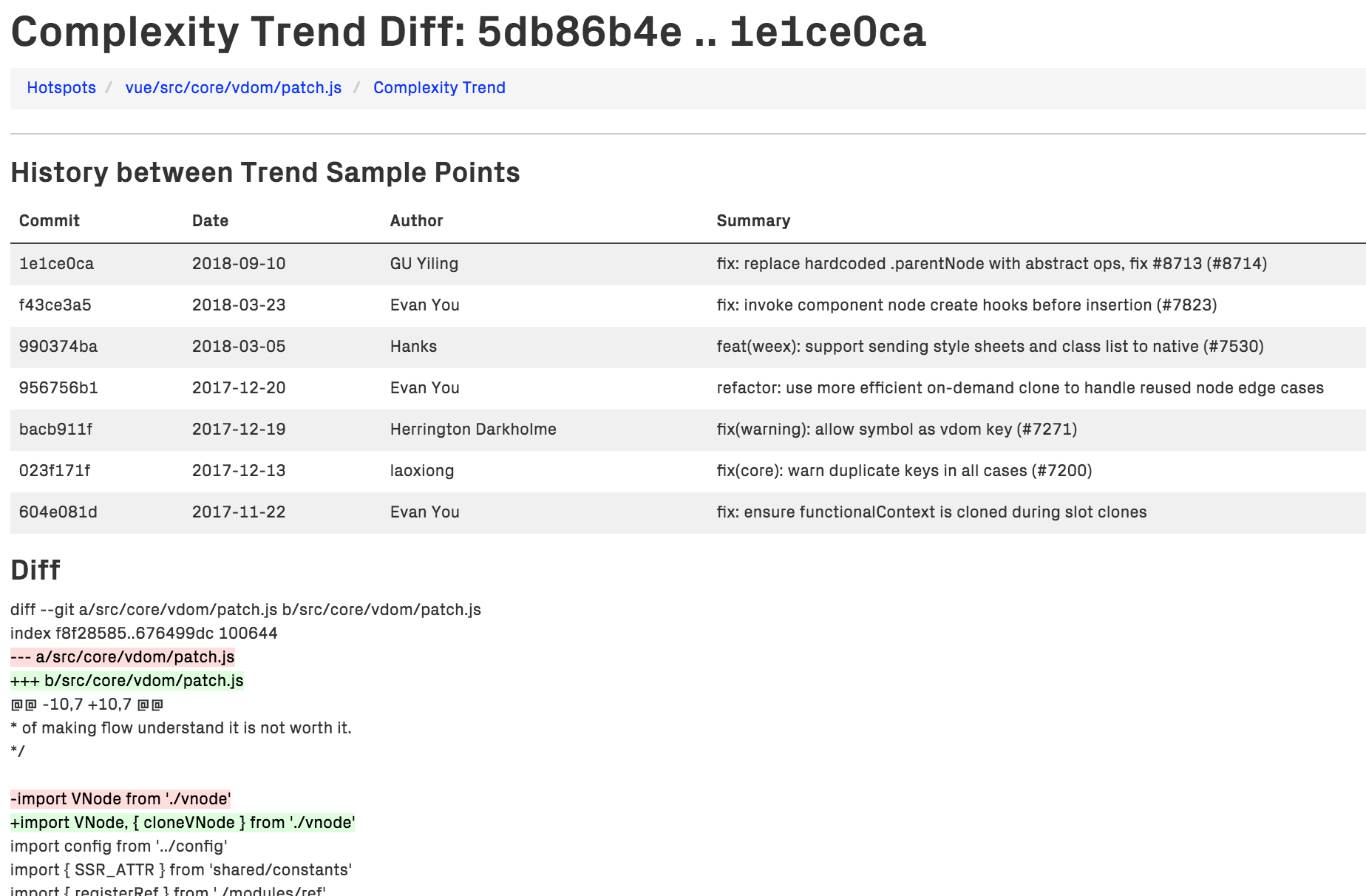

The diff is done between the sample points in the complexity trend graph, which might include several commits. Hence, the diff view includes the history between those samples points as shown in Fig. 117.

Fig. 117 The complexity trend diff includes the history between the samples points.¶

Limitation: Please note that in the current version, CodeScene won’t be able to diff files that have been renamed or moved. This functionality is likely to be added in a future version.

What’s “Complexity” anyway?¶

All right, we said that Complexity Trends calculate the complexity of historic versions of our Hotspots. So what kind of metric do we use for complexity?

The software industry has several well-known metric. You might have heard about Cyclomatic Complexity or Halstead’s volume measurement. These are just two examples. What all complexity metrics have in common, however, are that they are pretty bad at predicting complexity!

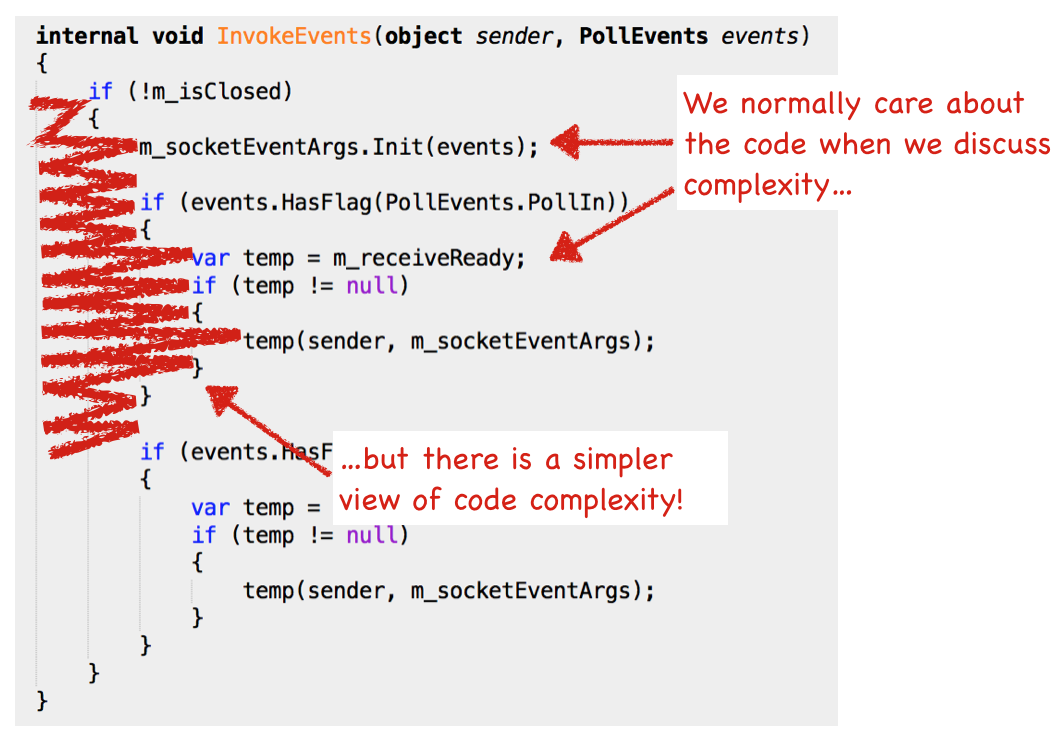

So we’ll use a less known metric, but one that has been shown to correlate well with the more popular metrics. We’ll use indentation-based complexity as illustrated in Fig. 118

Fig. 118 Explaining whitespace complexity.¶

Virtually all programming languages use whitespace as indentation to improve readability. In fact, if you look at some code, any code, you’ll see that there’s a strong correlation between your indentations and the code’s branches and loops. Our indentation-based metric calculates the number of indentations (tabs are translated to spaces) with comments and blank lines stripped away.

Indentation-based complexity gives us a number of advantages:

It’s language-neutral, which means you get the same metric for Java, JavaScript, C++, Clojure, etc. This is important in today’s polyglot codebases.

It’s fast to calculate, which means you don’t have to wait half a day to get your analysis results.

Know the Limitations of Indentation-Based Complexity¶

Of course, there’s no such thing as a perfect complexity metric. Indentation-based complexity has a number of pitfalls and possible biases. Let’s discuss them so that you can keep an eye at them as you interpret the trends in your own code:

Sensitive to layout changes: If you change your indentation style midway through a project, you run the risk of getting biased results. In that case you need to know at what date you made that change and use that when interpreting the results.

Sensitive to individual differences in style: Let’s face it - you want a consistent style within the same module. Inconsistent indentation styles makes it harder to manually scan the code. So please settle on a shared style.

Does not understand complex language constructs: There are certain language constructs that indentation-based complexity will treat as simple although the opposite may hold true. Examples include list compressions and their relatives like the stream API in Java 8 or LINQ in .NET. On the other hand, it’s common to add line breaks and indent those constructs as well.

All right, we’re through this guide on Complexity Trends and you’re ready to explore the patterns in your own codebase. Just remember that, like all models of complex processes, Complexity Trends are an heuristic - not an absolute truth. They still need your expertise and knowledge of the codebase’s context to interpret them.